Why Prompt Engineering Felt Wrong (And What Skills Changed)

Standing room only at TechLawFest 2025. Everyone was learning COSTAR and GCES—popular frameworks for writing better prompts. I sat there feeling anxious. Not because I was struggling to keep up, but because I don't use any of this.

I use AI every day. Big things. Real work. But I've never typed "COSTAR" into a prompt in my life.

The anxious voice in my head kept asking: "Am I doing this wrong? Should I be learning these frameworks like everyone else?"

Turns out, that same month—September 2025—the technology was shifting. Skills were being reverse-engineered, agents were being defined. By October, everything changed.

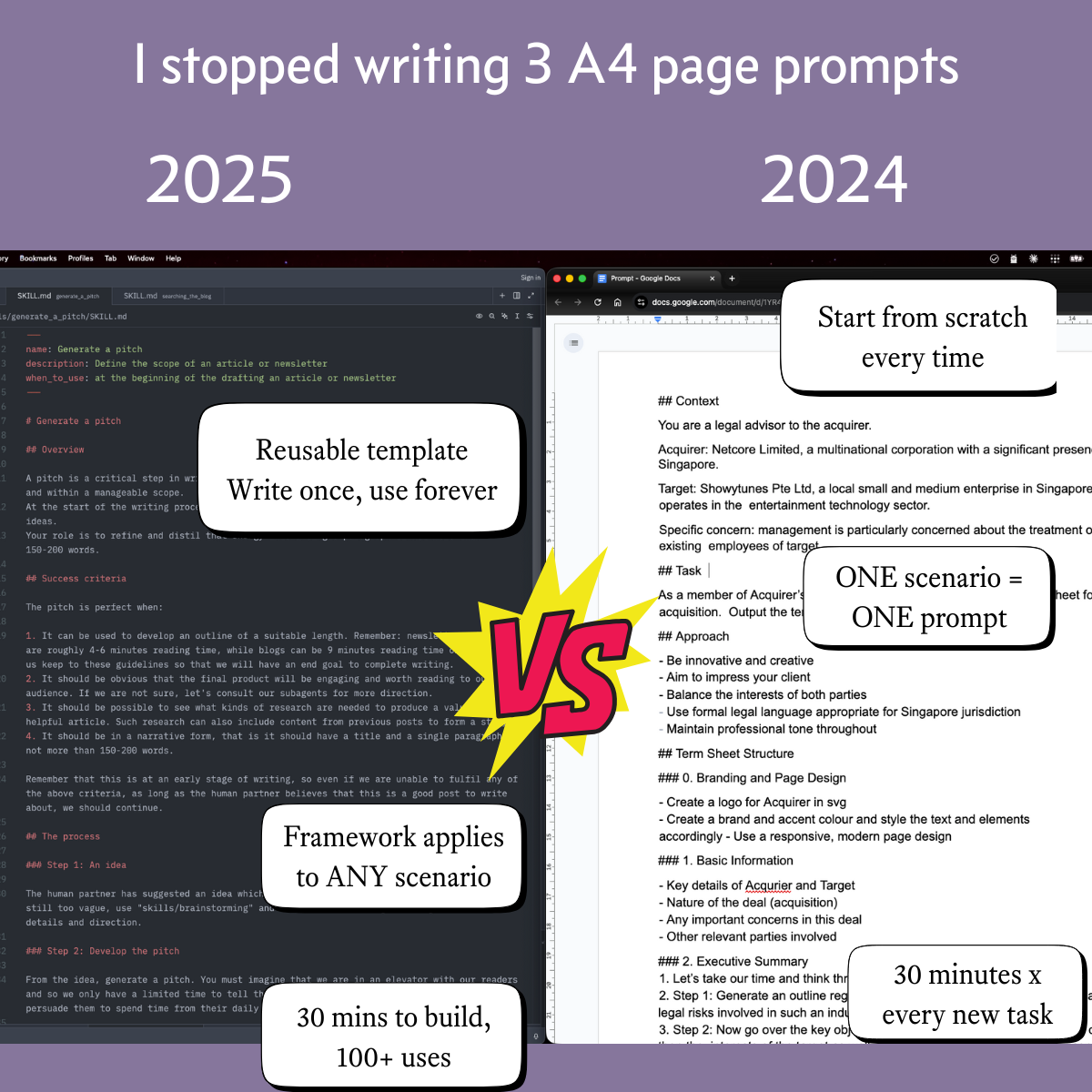

The Year Before

Back in 2023, I had created a set of tutorials on prompt engineering for lawyers, convinced this was the future.

2024 was my rock bottom with prompt engineering. I entered a prompt-writing competition. Spent weeks crafting a 3-page prompt for generating M&A term sheets. Six major sections. Step-by-step instructions. GCES framework. Elaborate formatting requirements.

I was so proud of the detail. So convinced it would showcase what I knew.

I got disqualified for missing the deadline. Spent too much time perfecting it.

That 3-page prompt felt excessive even then. But I thought that was how you were supposed to do it. Write the perfect prompt. Specify every step. Make it comprehensive.

What Changed in September

After TechLawFest ended, I returned to my actual work problems. The ones that kept me up at night had nothing to do with writing better prompts:

How do I persist what the agent knows? Every new chat session started from scratch. I'd explain my review criteria again. And again. And again.

How do I ensure the agent follows my workflows precisely? Sometimes it would skip steps. Sometimes it would improvise. I needed reliability, not creativity.

In legal work, reliability remains a central concern.

How do I scale this? Each project needed different workflows. Contract review. Research summaries. Document generation. My prompts were getting longer and longer, trying to cram everything into context.

That's when I found Simon Willison documenting something new—agents that work in loops. GitHub repos exploring persistent skill systems. Tools that could encode workflows once and run them autonomously.

I realized: These systems solve the problems that made prompt engineering feel wrong.

Not "write a better prompt each time" but "encode your judgment once, run it forever."

Now I have a 45-line skill that does what that 3-page prompt tried to do. But it persists. It runs precisely. And I can build a library of these without hitting token limits, because the agent only loads a skill's full instructions when a task calls for it.
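To make the "library without hitting token limits" point concrete, here is a minimal Python sketch of the pattern. It assumes a skill system that keeps only each skill's name and short description in context and pulls in the full instructions on demand (Anthropic describes this as progressive disclosure for its Agent Skills). The file layout, the `nda-review` skill, its checklist items, and the helpers `parse_skill`, `context_index`, and `load_skill` are all hypothetical illustrations, not my actual 45-line skill and not any tool's real API.

```python
from dataclasses import dataclass


@dataclass
class Skill:
    name: str
    description: str  # short summary: always visible to the agent
    body: str         # full checklist/workflow: loaded only on demand


def parse_skill(text: str) -> Skill:
    """Parse a hypothetical skill file: '---' front matter, then Markdown."""
    _, header, body = text.split("---", 2)
    fields = {}
    for line in header.strip().splitlines():
        key, _, value = line.partition(":")
        fields[key.strip()] = value.strip()
    return Skill(fields["name"], fields["description"], body.strip())


def context_index(skills: list[Skill]) -> str:
    """What every new session sees: one line per skill, not the full text."""
    return "\n".join(f"- {s.name}: {s.description}" for s in skills)


def load_skill(skills: list[Skill], name: str) -> str:
    """Pull in one skill's full instructions when a task needs it."""
    for s in skills:
        if s.name == name:
            return s.body
    raise KeyError(name)


# Hypothetical example skill; the checklist items are illustrative only.
NDA_SKILL = """---
name: nda-review
description: Review an NDA against my standard checklist
---
# NDA review
1. Confirm the definition of Confidential Information is not overbroad.
2. Check the term and survival period.
3. Flag any non-solicit or non-compete clauses.
"""

library = [parse_skill(NDA_SKILL)]
print(context_index(library))             # the only part always in context
print(load_skill(library, "nda-review"))  # loaded only for an NDA task
```

Adding a tenth or twentieth skill grows the always-loaded index by one line each, not by a full checklist, which is why a library scales where one ever-longer prompt does not.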

That's the shift. Not "prompt better" but "build systems that persist."

If You Felt Like Prompt Engineering Didn't Fit

Here's what I learned: If you felt like prompt engineering frameworks didn't fit your work, your instinct was right.

Not because prompt engineering is wrong—it still works for chat tools like ChatGPT. But because in September 2025, we got access to something different. Agent skills that encode your judgment as reusable systems, not one-time prompts.

I wrote a long article explaining what changed, why it matters, and what resource-constrained legal teams should do about it. In it, you'll get three things:

1. A working NDA review skill you can adapt for your practice. If you can write a checklist in Markdown, you can build a skill.

2. The economics breakdown. Many vendor tools charge around $50/user/month ($600/year for solo counsel, $6,000/year for a 10-person team). Custom skills cost $20-50/month ($240-600/year) regardless of team size. I explain when each makes sense.

3. A decision framework to evaluate whether this approach fits your practice. When to use chat tools, vendor tools, or custom skills—including security considerations, time investment, and when vendor tools make more sense than DIY.
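The cost comparison above is simple arithmetic, and it is worth checking. This minimal Python sketch takes the post's figures at face value (around $50 per user per month for a vendor tool, $20-50 per month for custom skills) and assumes the custom cost is a flat monthly fee that does not grow with headcount. It deliberately ignores the time spent building and maintaining skills, which belongs in the decision framework rather than the price tag. The function names are illustrative only.

```python
# Figures taken from the post; the custom cost is assumed to be a flat fee.
VENDOR_PER_USER_MONTHLY = 50
CUSTOM_MONTHLY_LOW, CUSTOM_MONTHLY_HIGH = 20, 50
MONTHS_PER_YEAR = 12


def vendor_annual(users: int) -> int:
    """Per-seat pricing: cost scales linearly with headcount."""
    return VENDOR_PER_USER_MONTHLY * users * MONTHS_PER_YEAR


def custom_annual() -> tuple[int, int]:
    """Flat pricing: the same range whether one lawyer or ten use it."""
    return (CUSTOM_MONTHLY_LOW * MONTHS_PER_YEAR,
            CUSTOM_MONTHLY_HIGH * MONTHS_PER_YEAR)


for users in (1, 10):
    low, high = custom_annual()
    print(f"{users:>2} user(s): vendor ${vendor_annual(users):,}/yr, "
          f"custom ${low:,}-${high:,}/yr")
```

For solo counsel the two options overlap at the top of the range ($600/year either way). At ten users the per-seat model reaches $6,000/year while the flat range stays at $240-600/year.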

Maybe you don't need to learn what everyone else is learning. Maybe your instinct that "this doesn't solve my real problems" was right all along.
