October 3 Changed Everything: How One $800 Fine Flipped Singapore's AI Coverage from Adoption to Accountability

The Shift Nobody Saw Coming

On October 3, 2025, a Singapore lawyer was ordered to pay $800 after a junior associate used AI to generate a non-existent case citation. That single sanction changed everything.

I've been writing about AI and legal practice throughout 2025—from Harvey's pricing lessons to Singapore's AI guidelines to prompt engineering workshops. But when I analyzed the year's legal news data, I discovered something I'd missed while covering individual stories: the narrative didn't evolve—it flipped.

Before October 3, legal news coverage of AI was overwhelmingly optimistic: 87% of articles (13 of 15) focused on training programs, efficiency gains, and innovation opportunities. After October 3, 83% (10 of 12) emphasized accountability, professional discipline, and verification duties.

The shift wasn't gradual. It happened in eight weeks.

The Pattern in the Data

I analyzed 27 AI-related legal articles from data.zeeker.sg covering April through December 2025. When I tracked keyword frequency across the timeline, the crossover was striking:

Adoption Keywords (training, innovation, efficiency, opportunity, launch):

- May 2025: 12 mentions across 6 articles

- September 2025: 7 mentions across 3 articles

- October 2025: 8 mentions across 4 articles

Accountability Keywords (accountability, discipline, sanctions, verification, oversight):

- May 2025: 3 mentions across 6 articles

- September 2025: 5 mentions across 3 articles

- October 2025: 18 mentions across 4 articles

In one month, accountability mentions more than tripled (from 5 to 18) while the article count barely moved (3 to 4). This wasn't about volume. It was about tone, consequences, and professional fear.

Part 1: What Happened (The Finding)

Phase 1: The Adoption Era (April-September 2025)

Peak Optimism: May 2025

May was the high-water mark for AI enthusiasm. Six articles that month painted a picture of transformation:

- May 8: "Generative AI a top priority for firms" - Survey shows strategic adoption despite privacy concerns

- May 22: "New training programme launched for young lawyers to stay ahead of AI curve" - Structured modules on AI ethics, prompt engineering

- May 23: "Singapore's ADR ecosystem must evolve" - AI-assisted mediation highlighted as innovation

- May 26: "GenAI in resume writing, job assessments: Foul use or fair play?" - Debate on legitimate use vs. misuse

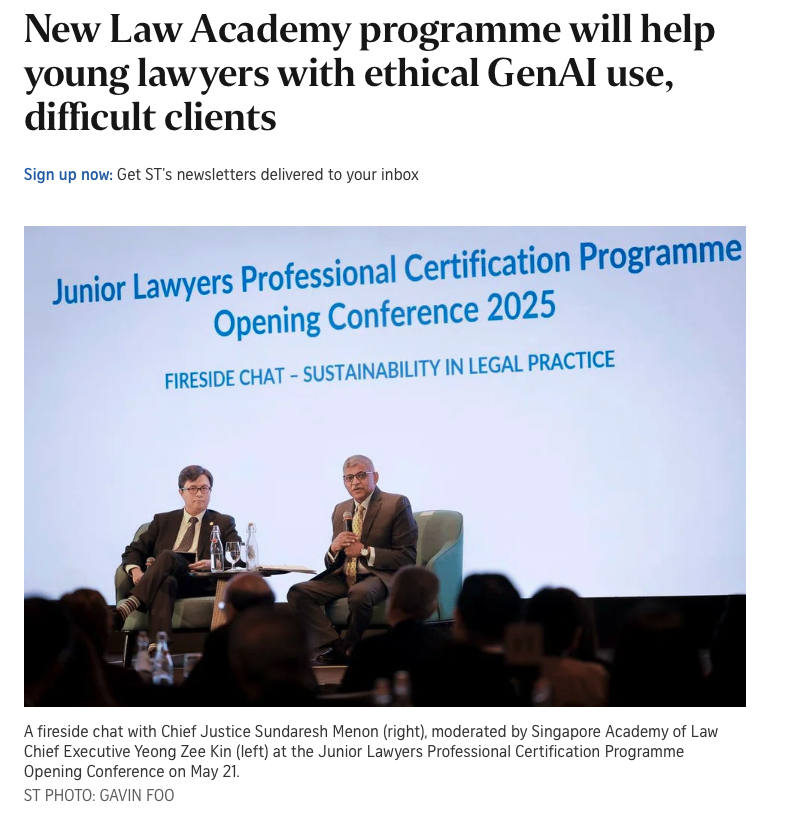

- May 22: "New Law Academy programme will help young lawyers with ethical GenAI use" - Singapore Academy of Law (SAL) launches Junior Lawyers Professional Certification Programme targeting AI literacy

The keywords told the story: training (3 mentions), innovation (2), efficiency (2), launched (3). The tone was overwhelmingly "How do we adopt this?" not "How do we prevent harm?"

Last Hurrah: September 12, 2025

The final major adoption push came at TechLaw.Fest when SAL and IMDA (Infocomm Media Development Authority) launched GPT-Legal Q&A and LawNet 4.0, an AI search engine trained on Singapore judgments and legislation. The article emphasized the tool's ability to "limit hallucinations" and "complement keyword search," but the focus was still on the opportunity, not the risk.

Needing less time for legal research, lawyers will be able to focus on other aspects of case management. Dr Ong said: “Many lawyers have dropped out of the profession because of things like long hours. So being more efficient will hopefully help keep lawyers in the profession.”

Keywords that month: innovation, opportunity (2), launch (2). Accountability mentions were climbing (5 total), but they were still secondary to the dominant narrative: AI was inevitable, and firms should embrace it.

Phase 2: The Inflection Point (October 3, 2025)

The Watershed: $800 and Everything Changes

On October 3, the High Court ordered a lawyer to pay $800 after discovering a junior's AI-generated, non-existent case citation in court papers. I covered the case details when it broke, but what I didn't see at the time was the pattern it would trigger.

The ruling established AI verification as a professional duty—with personal costs orders, public judicial rebuke, and disciplinary consequences. This wasn't a warning. It was a precedent.

The October Spike

Keyword counts that month:

- verification: 4 mentions (up from 0-1 in prior months)

- duty/obligation: 4 mentions

- oversight/supervision: 4 mentions

- accountability: 3 mentions

- dismissal (as in "sackable offence"): 3 mentions

The shift was immediate and total. At the time, I wrote about why October mattered for AI and open source, but I was focused on Hacktoberfest and agent development. I didn't see the accountability avalanche building in the data.

Phase 3: The Accountability Era (October-December 2025)

October 22: Firms Escalate to Dismissal Policies

"Breaches of AI policy could be a sackable offence at some Singapore law firms"

Following the High Court finding, firms escalated from guidance to discipline. The article was explicit: GenAI breaches now range from restricted tool access to dismissal. Lead lawyers remain personally accountable for AI-assisted work.

October 29: SAL Shifts Tone from Enablement to Control

"Use of Gen AI in legal practice must be balanced with careful oversight: SAL Forum"

SAL's tone shifted from enablement (May's training programs) to control. The guidance: adopt explicit internal policies including sanctions, safeguard confidentiality, train lawyers in verification. The message was clear—the October 3 incident had changed the playbook.

November 6: Courts Continue Enforcement

"Two lawyers rapped over 'entirely fictitious' AI-generated citations"

It happened again. Justice S Mohan rebuked two lawyers for citing "entirely fictitious" cases (likely AI hallucinations) in closing submissions. Sanctions were pending. The pattern was now established: courts would not tolerate unverified AI output.

December 11: Year-End Consolidation

Two articles from local Chinese newspapers:

- "Singapore Courts: Cases of AI Fabrication a 'Misuse of Technology'" - Courts made plain that lawyers remain personally liable, AI output must be verified

- "Lawyers: AI is a supplementary tool and not a replacement for a lawyer's judgement" - Firms responding with on-prem tools, supervised workflows, audit trails

By year's end, November and December combined for 7 articles—5 focused on accountability, discipline, and professional duties. The era of unqualified AI enthusiasm was over.

What This Means for You

I've written throughout 2025 about what AI guidelines miss for solo counsel, lessons from Harvey's pricing, and the difference between busy and productive AI use. But the keyword frequency analysis reveals something those individual posts couldn't capture: the speed and totality of the narrative flip.

The Pattern Shows Three Things Previous Coverage Missed:

1. The shift happened in one month, not gradually

May to September showed steady adoption rhetoric. October's keyword spike wasn't incremental: accountability language jumped 3.6x (from 5 to 18 mentions) while article counts stayed nearly flat. One case rewrote six months of optimism in eight weeks.

2. Media coverage became enforcement documentation

Before October: "How to use AI safely." After October: "Firms implementing dismissal policies," "Courts issuing sanctions," "Verification now mandatory." The articles weren't just reporting consequences—they were cataloging them as precedent.

3. Solo counsel face compounding disadvantages

The data shows firms responded with infrastructure: two-tier oversight, restricted tool access, audit trails. As I wrote when the case broke, solo counsel don't have junior lawyers to supervise or compliance teams to build verification protocols. You're both the user and the oversight system.

The Trade-Off Solo Counsel Actually Face

Solo practitioners don't have those infrastructure options. You can't implement a two-tier review system when you're the only tier.

What the keyword frequency timeline reveals isn't just that accountability became mandatory—it's how quickly the shift happened. Solo counsel who adopted AI in May under "innovation and efficiency" framing now operate under "verification and oversight" requirements with no transition period.

The honest question: Is the productivity gain still worth it when you add verification time? The answer depends on your practice, your risk tolerance, and whether you have informal peer review networks. What's clear from the data is that 2025 removed the option to use AI without addressing this trade-off.

The keyword timeline proves what many suspected: 2025 was the year Singapore's legal profession learned that AI isn't just a productivity tool—it's a liability risk. And the shift happened faster than anyone expected.

Two ways to read the rest of this post:

📊 For practitioners: Skip to "Why This Matters" below for operational takeaways, then review "What's Next?" for ongoing monitoring

🔧 For legal technologists and data enthusiasts: Continue to Part 2 for full methodology, SQL queries, and replication instructions

Part 2: How I Found This (The Method)

This analysis only became possible because I've spent the past year building data.zeeker.sg—a structured, queryable database of Singapore legal news. Here's how you can replicate this kind of analysis yourself.

Step 1: The Wrong First Query

I started with what seemed obvious:

    SELECT
        strftime('%Y-%m', date) AS month,
        COUNT(*) AS ai_articles
    FROM headlines
    WHERE summary LIKE '%AI%'
      AND date >= '2025-04-01'
    GROUP BY month;

Problem: This caught false positives like "flail," "Thailand," and "available."

Result: The query returned hundreds of matches. LIKE in SQLite ignores ASCII case by default, so "%AI%" matches those two letters anywhere in the text, even inside other words. Hundreds of "AI" matches meant the search was too broad, not that AI appeared in hundreds of articles.

Lesson learned: A bare "%AI%" pattern is too broad for unstructured text. Search for explicit terms instead.
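To see the false positives concretely, here is a minimal sketch using Python's built-in sqlite3 module against an in-memory table. The `headlines` table and `summary` column names come from the queries in this post; the three sample rows are invented for illustration and are not taken from data.zeeker.sg. The `GLOB` comparison is just one possible stricter alternative, not the approach this post ends up using.

```python
import sqlite3

# Hypothetical sample rows, invented for illustration only.
rows = [
    ("2025-05-08", "Firms plan to adopt AI tools this year"),
    ("2025-05-09", "Thailand signs extradition treaty"),
    ("2025-05-10", "Legal aid now available online"),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE headlines (date TEXT, summary TEXT)")
conn.executemany("INSERT INTO headlines VALUES (?, ?)", rows)

# LIKE ignores ASCII case by default in SQLite, so '%AI%' also matches
# the "ai" inside "Thailand", "aid" and "available".
broad = conn.execute(
    "SELECT COUNT(*) FROM headlines WHERE summary LIKE '%AI%'"
).fetchone()[0]

# GLOB is case-sensitive; padding with spaces approximates a whole-word match.
# It would still miss "AI," or "AI." where punctuation follows the word.
strict = conn.execute(
    "SELECT COUNT(*) FROM headlines WHERE ' ' || summary || ' ' GLOB '* AI *'"
).fetchone()[0]

print(broad, strict)
```

On these three rows the broad pattern matches every row, while the stricter one matches only the row that actually mentions AI.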

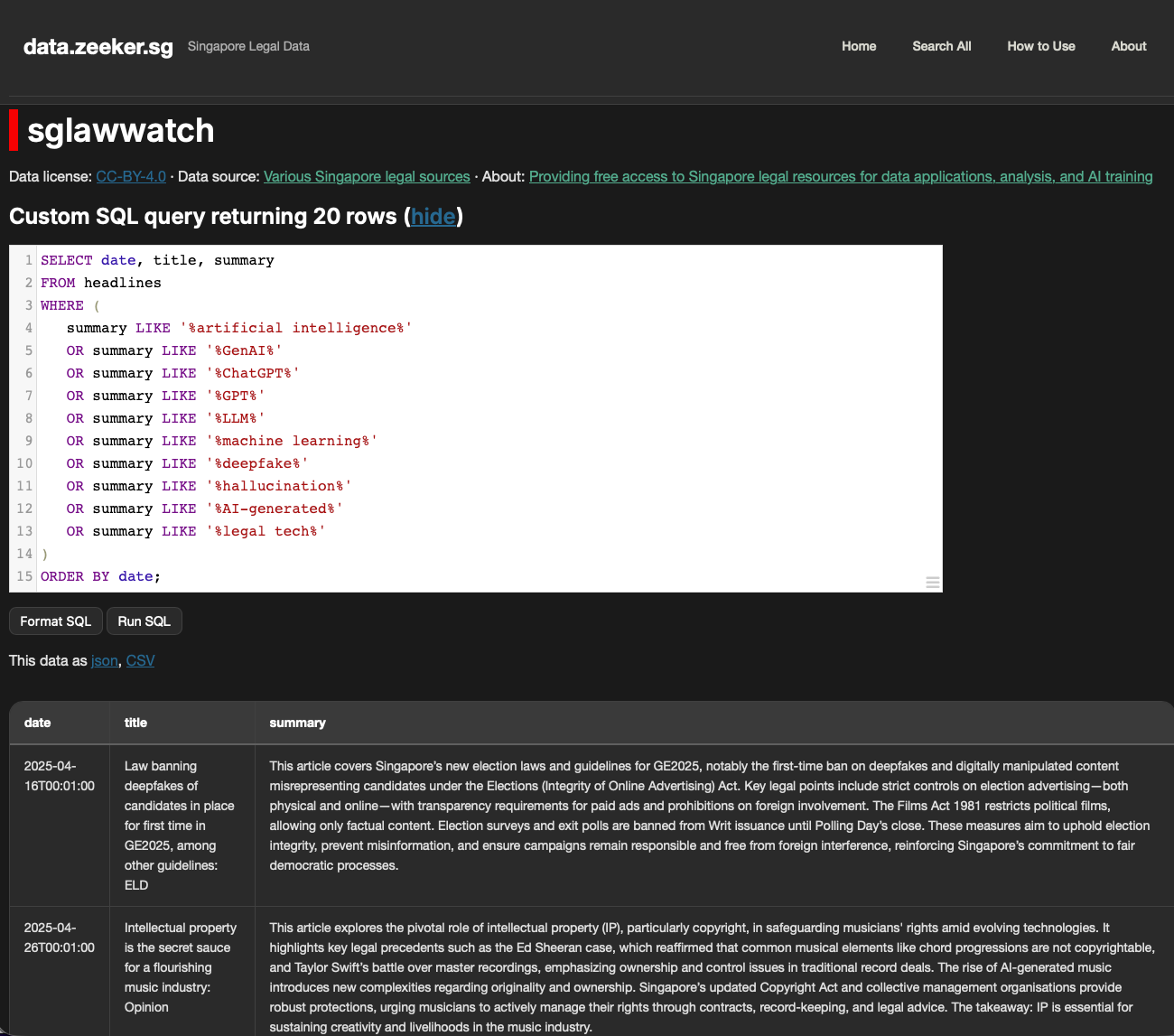

Step 2: Expanding the Net

I revised to include explicit AI-related terms:

    WHERE (
        summary LIKE '%artificial intelligence%'
        OR summary LIKE '%GenAI%'
        OR summary LIKE '%ChatGPT%'
        OR summary LIKE '%GPT%'  -- also covers "ChatGPT" and "GPT-Legal"
        OR summary LIKE '%LLM%'
        OR summary LIKE '%machine learning%'
        OR summary LIKE '%deepfake%'
        OR summary LIKE '%hallucination%'
        OR summary LIKE '%AI-generated%'
        OR summary LIKE '%legal tech%'
    )

Result: 27 articles across 9 months.

Monthly breakdown:

- Apr: 2, May: 6, Jun: 1, Jul: 2, Aug: 2, Sep: 3, Oct: 4, Nov: 5, Dec: 2

An earlier iteration searched only for "artificial intelligence". That missed most of the coverage, because AI-related news is described in many different ways. (For example, "ChatGPT" isn't always mentioned alongside "artificial intelligence", but it's still relevant for my analysis.)

An expanded net garnered more articles. This showed volume increased slightly in Q4 (11 articles) vs Q2 (9 articles), but nothing dramatic. The story wasn't in the count.
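A minimal sketch of running the expanded search from Python, assuming the same `headlines` table with `date` and `summary` columns. The helper name `monthly_ai_counts` and the sample rows are hypothetical. It builds the OR chain from a list of terms and passes them as bound parameters, so adding a term means editing one list rather than the SQL string.

```python
import sqlite3

# Terms copied from the WHERE clause in Step 2.
AI_TERMS = [
    "artificial intelligence", "GenAI", "ChatGPT", "GPT", "LLM",
    "machine learning", "deepfake", "hallucination", "AI-generated",
    "legal tech",
]

def monthly_ai_counts(conn, terms, since="2025-04-01"):
    """Return [(month, article_count)] for rows whose summary matches any term."""
    where = " OR ".join("summary LIKE ?" for _ in terms)
    sql = (
        "SELECT strftime('%Y-%m', date) AS month, COUNT(*) "
        f"FROM headlines WHERE ({where}) AND date >= ? "
        "GROUP BY month ORDER BY month"
    )
    params = [f"%{t}%" for t in terms] + [since]
    return conn.execute(sql, params).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE headlines (date TEXT, summary TEXT)")
conn.executemany("INSERT INTO headlines VALUES (?, ?)", [
    # Hypothetical rows, invented for illustration only.
    ("2025-05-22", "New programme on ethical GenAI use"),
    ("2025-05-23", "Thailand signs extradition treaty"),
    ("2025-10-03", "Lawyer cites AI-generated case"),
])

counts = monthly_ai_counts(conn, AI_TERMS)
print(counts)
```

Unlike the Step 1 query, the "Thailand" row is not counted here, because none of the explicit terms appear in it.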

Step 3: Reading for Tone (Manual Classification)

I exported all 27 articles with full summaries:

    SELECT date, title, summary
    FROM headlines
    WHERE [same AI terms]
    ORDER BY date;

Then I read each one and asked: Is this about adoption/opportunity or accountability/consequences?

Classification criteria I used:

- Adoption: Articles emphasizing training programs, efficiency gains, innovation opportunities, tool launches, strategic adoption

- Accountability: Articles about professional discipline, court sanctions, verification duties, firm policies on dismissals, mandatory oversight

- Edge cases: Articles covering both aspects were classified based on dominant tone and headline framing

For example, articles about AI training in response to the October 3 fine were classified as "Accountability" (reactive, not proactive enablement).
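The classification in this step was done by reading each article. For a larger archive, a keyword-based first pass can help triage before the manual read. The sketch below is an illustration only, not the method used for this post: the function name `first_pass_label`, the tie-goes-to-review rule, and the sample summaries are all assumptions. The keyword lists reuse the adoption and accountability terms listed earlier, with "sanction" used as a stem.

```python
ADOPTION = ("training", "innovation", "efficiency", "opportunity", "launch")
ACCOUNTABILITY = ("accountability", "discipline", "sanction",
                  "verification", "oversight")

def first_pass_label(summary):
    """Suggest a label from keyword hits; ties are flagged for manual review."""
    text = summary.lower()
    adopt = sum(word in text for word in ADOPTION)
    account = sum(word in text for word in ACCOUNTABILITY)
    if adopt > account:
        return "adoption"
    if account > adopt:
        return "accountability"
    return "review"

# Hypothetical summaries, invented for illustration only.
labels = [
    first_pass_label("New training programme launched for young lawyers"),
    first_pass_label("Court stresses verification and oversight duties"),
    first_pass_label("Firms add training after court sanctions"),
]
print(labels)
```

The third summary is the kind of edge case described above: it mentions training, but in reaction to sanctions, so a keyword count alone cannot settle it and a human still has to read it.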

Results:

Before October 3 (15 articles):

- Adoption-focused: 13 articles (87%)

- Accountability-focused: 2 articles (13%)

After October 3 (12 articles):

- Adoption-focused: 2 articles (17%)

- Accountability-focused: 10 articles (83%)
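The percentages follow directly from the article counts. A small sketch of the arithmetic, using only the counts listed in the results (the helper name `shares` is hypothetical):

```python
# Counts taken from the classification results in this step.
before = {"adoption": 13, "accountability": 2}
after = {"adoption": 2, "accountability": 10}

def shares(counts):
    """Convert raw article counts to whole-number percentages."""
    total = sum(counts.values())
    return {label: round(100 * n / total) for label, n in counts.items()}

before_pct = shares(before)
after_pct = shares(after)
print(before_pct, after_pct)
```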

The flip was real. But I wanted to prove it with data, not just my reading. So I went back to the articles to identify which specific keywords appeared in each group.

Step 4: Keyword Frequency Analysis (The Hook Graphic)

From reading the 27 articles in Step 3, I noticed specific words appeared repeatedly in each category:

Adoption articles used: training, innovation, efficiency, opportunity, launch

Accountability articles used: accountability, discipline, sanctions, verification, oversight, misuse, dismissal

To quantify the tone shift, I counted these keywords in the article text (not summaries) month by month:

    SELECT
        strftime('%Y-%m', date) AS month,
        -- Adoption keywords
        SUM(CASE WHEN text LIKE '%training%' OR text LIKE '%programme%' THEN 1 ELSE 0 END) AS training,
        SUM(CASE WHEN text LIKE '%innovation%' THEN 1 ELSE 0 END) AS innovation,
        SUM(CASE WHEN text LIKE '%efficiency%' THEN 1 ELSE 0 END) AS efficiency,
        SUM(CASE WHEN text LIKE '%launched%' THEN 1 ELSE 0 END) AS launch,
        -- Accountability keywords
        SUM(CASE WHEN text LIKE '%accountability%' THEN 1 ELSE 0 END) AS accountability,
        SUM(CASE WHEN text LIKE '%discipline%' THEN 1 ELSE 0 END) AS discipline,
        SUM(CASE WHEN text LIKE '%verification%' THEN 1 ELSE 0 END) AS verification,
        SUM(CASE WHEN text LIKE '%oversight%' THEN 1 ELSE 0 END) AS oversight,
        SUM(CASE WHEN text LIKE '%sack%' OR text LIKE '%dismiss%' THEN 1 ELSE 0 END) AS dismissal
    FROM headlines
    WHERE [AI terms]
    GROUP BY month;

Note on counting: These numbers represent articles containing the keyword, not total occurrences. An article mentioning "verification" three times counts as 1. This approach captures how many pieces of coverage emphasized that theme rather than raw word frequency.
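The difference between the two counting approaches is easy to see on a toy table. This is a minimal sketch assuming a `headlines` table with a `text` column, as in the query above; the three article texts are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE headlines (date TEXT, text TEXT)")
conn.executemany("INSERT INTO headlines VALUES (?, ?)", [
    # Hypothetical article text, invented for illustration only.
    ("2025-10-22", "Verification is a duty. Firms now require verification "
                   "logs and a second verification by a partner."),
    ("2025-10-29", "The forum stressed oversight and verification."),
    ("2025-10-30", "A new mediation centre opened."),
])

# Article-level count: each article contributes at most 1.
articles = conn.execute(
    "SELECT SUM(CASE WHEN text LIKE '%verification%' THEN 1 ELSE 0 END) "
    "FROM headlines"
).fetchone()[0]

# Occurrence-level count, done in Python for comparison.
occurrences = sum(
    text.lower().count("verification")
    for (text,) in conn.execute("SELECT text FROM headlines")
)

print(articles, occurrences)
```

Two articles mention the keyword, but it occurs four times in total. Counting per article stops a single long piece from dominating a month's figure.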

The results:

| Month | Training | Innovation | Launch | Verification | Oversight | Dismissal |
|---|---|---|---|---|---|---|
| May | 3 | 2 | 3 | 0 | 0 | 0 |
| Sep | 2 | 1 | 2 | 1 | 1 | 0 |
| Oct | 3 | 1 | 1 | 4 | 4 | 3 |
| Nov | 3 | 1 | 3 | 0 | 2 | 2 |

October was the spike. Verification went from 0-1 mentions to 4. Oversight jumped to 4. Dismissal appeared for the first time (3 mentions).

When I summed "adoption cluster" vs "accountability cluster":

- May: Adoption 12, Accountability 3

- September: Adoption 7, Accountability 5

- October: Adoption 8, Accountability 18

The crossover was undeniable.
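The crossover can be checked mechanically from the three cluster sums. A short sketch using only the figures listed above (variable names are illustrative):

```python
# Cluster sums reported above: (adoption, accountability) per month.
clusters = {
    "May": (12, 3),
    "September": (7, 5),
    "October": (8, 18),
}

# First month in which accountability mentions exceed adoption mentions.
crossover = next(
    (month for month, (adopt, account) in clusters.items() if account > adopt),
    None,
)

# Growth in accountability mentions from September to October.
growth = clusters["October"][1] / clusters["September"][1]

print(crossover, round(growth, 1))
```

October is the first month where the accountability cluster overtakes the adoption cluster, and the September-to-October ratio works out to 3.6.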

What You Can Do With This

If you have access to structured legal data (your own firm's case notes, regulatory filings, news archives), you can run this same analysis:

- Start broad, then refine - Your first query will miss things. Iterate.

- Volume ≠ Story - Sometimes the shift is tone, not count.

- Manual classification is essential - SQL finds patterns, but you need to read to understand context.

- Keyword frequency quantifies qualitative shifts - Track specific terms over time to prove what you're seeing.

Try it yourself: Visit data.zeeker.sg, click "SQL Query," and paste the queries from this post. My database is open, free, and structured for exactly this kind of exploration.

Using data.zeeker.sg for Legal News Monitoring

Beyond this analysis, you can use data.zeeker.sg to track legal developments in your practice areas:

Search by topic or keyword:

    SELECT date, title, url
    FROM headlines
    WHERE summary LIKE '%compliance%'
       OR summary LIKE '%anti-money laundering%'
    ORDER BY date DESC
    LIMIT 20;

Track coverage trends in your sector:

    SELECT strftime('%Y-%m', date) AS month,
           COUNT(*) AS articles
    FROM headlines
    WHERE summary LIKE '%corporate governance%'
      AND date >= '2025-01-01'
    GROUP BY month;

Monitor enforcement patterns:

    -- Caution: as with '%AI%' in Step 1, LIKE matches substrings regardless of case,
    -- so '%fine%' also hits "define" and '%MAS%' also hits "Christmas". Review results by hand.
    SELECT date, title, summary
    FROM headlines
    WHERE (summary LIKE '%fine%' OR summary LIKE '%sanction%')
      AND summary LIKE '%MAS%'  -- Monetary Authority of Singapore
    ORDER BY date DESC;

Find related cases for research:

    SELECT title, date, url
    FROM headlines
    WHERE text LIKE '%breach of fiduciary%'
      AND date >= '2024-01-01'
    ORDER BY date DESC;

The database contains structured summaries of Singapore legal news, updated regularly. Use it to stay current on regulatory developments, track enforcement trends, or research coverage patterns in your areas of practice.

Why This Matters

The shift from adoption to accountability happened faster than anyone expected. One $800 fine in October rewrote the narrative that six months of training programs had built.

For solo counsel and small teams, the lesson is operational: verification isn't optional anymore. Courts expect it. Firms are enforcing it. Clients will demand it.

For legal data nerds (like me), this proves something I've been exploring all year: structured, queryable legal information reveals patterns invisible in traditional research. The story was always there in the 27 articles. It just took SQL and a timeline to see it.

And for anyone tracking the broader AI shift—from prompt engineering workshops that felt wrong to building agent-friendly tools to understanding what actually creates value in AI work—this keyword timeline captures the moment legal practice realized the technology had already changed the rules.

What's Next?

I'm continuing to track AI coverage into 2026. If you want to explore this data yourself or suggest other patterns to investigate, the database is open: data.zeeker.sg

And if you found this analysis useful, consider what other questions structured legal data could answer:

- How did scam enforcement rhetoric change month-by-month in 2025?

- What was the media timeline around the $3B money laundering case?

- When did "compliance" language spike in corporate governance coverage?

- How did AI performance benchmarks shift beyond just headline numbers?

The data is there. The tools are there. Your insights are waiting.

Behind the Analysis

All the background work for this post—discussion notes, data files, SQL queries, chart generation scripts, and research documentation—is available in the GitHub repository for this blog.

The repository shows how the analytical approach evolved through four iterations:

- Initial narrow searches that missed most coverage

- Expanding to capture more AI-related terms

- Manual classification to identify tone shifts

- Keyword frequency analysis to quantify the pattern

If you want to replicate this analysis, understand the methodology in detail, or see how the thinking evolved, everything is documented there.
